Defragmentation

What is Defragmentation and why do I need it?

Defragmentation, often referred to as “defrag,” is the process of reorganizing data on your hard drive. It brings together scattered pieces of data and arranges them in a contiguous, efficient manner.

You could say that defragmentation is like cleaning house for your servers or PCs: it picks up all of the pieces of data that are spread across your hard drive and puts them back together again, nice and neat and clean.

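Defragmentation increases computer performance, because it takes less time and fewer system resources to access a contiguous file than one broken into many individual pieces.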

Revolutionizing “Defragmentation” for Modern Systems

Defragmentation has undergone a transformative evolution to address the challenges posed by today’s advanced computing environments. While file and free space fragmentation persist in Windows environments, the strategies to combat these issues and enhance system performance demand a specialized and contemporary approach.

As the creators of Diskeeper, hailed as the most renowned “disk defragmenter” in history, we’ve achieved this evolution! Modern technologies, such as SSDs, virtualized systems, and cloud integration, introduce multiple layers of I/O inefficiencies and bottlenecks. To tackle these challenges head-on, we proudly present DymaxIO® fast data performance software.

DymaxIO stands at the forefront of innovation, eliminating I/O bottlenecks and delivering a substantial boost to overall Windows performance. Our software is meticulously crafted to unleash the full potential of your Windows systems, promising accelerated application response times, seamless operations, and heightened productivity for individuals or entire organizations.

Embark on a transformative journey by downloading a free 30-day trial of DymaxIO. Supercharge your PC’s performance now »

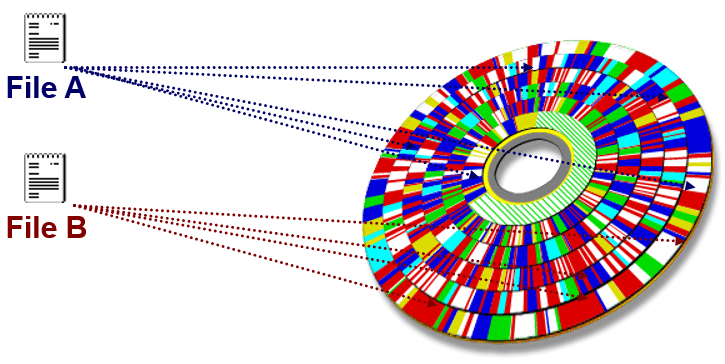

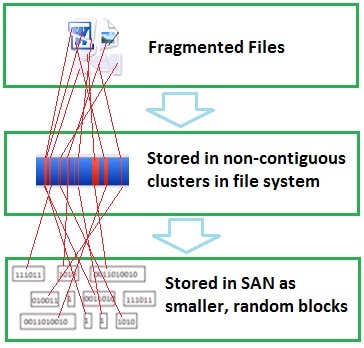

The Anatomy of Fragmentation

A natural byproduct of continuous file operations, fragmentation is an inherent facet of computing. As files are created, edited, and saved, they are broken into pieces to fit within the available free spaces on the disk. As files and free space become fragmented, computer performance degrades. However, among DymaxIO’s optimization features lies a pivotal solution: preventing performance-degrading fragmentation before it takes root! Augmented by DymaxIO’s array of patented technologies, the result is a swift enhancement of Windows performance, surpassing even its pristine state, and a prolonged lifespan for your system!
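To make the mechanism above concrete, here is a minimal Python sketch that models free space as a list of hypothetical `(start_cluster, length)` gaps. The function `allocate_split` fills gaps in disk order and splits the file wherever a gap runs out, which is how fragments arise. The function `allocate_contiguous_first` is a generic best-fit strategy that only splits a file when no single gap can hold it, illustrating the general idea of preventing fragmentation at write time. Both functions and the free-space map are illustrative assumptions; this is not how NTFS, IntelliWrite, or DymaxIO actually allocate clusters.

```python
from typing import List, Tuple

# Hypothetical model (not a real file system API):
# a free gap or a file fragment is (start_cluster, length).
Extent = Tuple[int, int]


def allocate_split(free: List[Extent], size: int) -> Tuple[List[Extent], List[Extent]]:
    """Fill free gaps in disk order, splitting the file wherever a gap runs out.

    Returns (file_extents, remaining_free_gaps); `free` is not modified.
    """
    extents: List[Extent] = []
    new_free: List[Extent] = []
    remaining = size
    for start, length in free:
        take = min(length, remaining)
        if take:
            extents.append((start, take))  # each partial fill becomes a fragment
            remaining -= take
        if take < length:
            new_free.append((start + take, length - take))  # leftover gap stays free
    if remaining:
        raise ValueError("not enough free space")
    return extents, new_free


def allocate_contiguous_first(free: List[Extent], size: int) -> Tuple[List[Extent], List[Extent]]:
    """Place the file in the smallest single gap that holds it (best fit).

    Falls back to splitting only when no gap is large enough.
    """
    fits = [gap for gap in free if gap[1] >= size]
    if not fits:
        return allocate_split(free, size)
    start, length = min(fits, key=lambda gap: gap[1])
    new_free = [gap for gap in free if gap != (start, length)]
    if length > size:
        new_free.append((start + size, length - size))
        new_free.sort()  # keep the free map in disk order
    return [(start, size)], new_free


if __name__ == "__main__":
    free_map = [(10, 4), (20, 3), (40, 50)]  # hypothetical free gaps
    split, _ = allocate_split(free_map, 12)
    whole, _ = allocate_contiguous_first(free_map, 12)
    print(len(split), split)  # 3 [(10, 4), (20, 3), (40, 5)]
    print(len(whole), whole)  # 1 [(40, 12)]
```

With the same free space, the naive allocator leaves the 12-cluster file in three pieces, while the contiguous-first allocator stores it in one piece and leaves the small gaps untouched. Best fit is only one of several possible placement heuristics; it is chosen here because it is short and easy to follow.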

Defragmentation and SSDs?

Traditional old-school defrag is a no-no for SSDs. However, it’s crucial to recognize that SSD performance does degrade over time and requires specialized treatment. Writing data into small, scattered free spaces can degrade an SSD’s write performance by up to 80% and shorten the device’s lifespan. Learn the details about SSD performance degradation and shortened lifespan. Read here.

Assessing Fragmentation Problems

It’s a common misconception for users to blame computer performance problems on the operating system or simply think their computer is “old”, whereas the true culprit is often disk fragmentation or I/O inefficiencies.

Recognize the signs of fragmentation-related issues:

- Slow applications

- System crashes and freezes

- Slow backup times

- Reduced data transfer speeds

- Slow boot-up times

- and more…

If your Windows systems are just giving you trouble, run a free 30-day trial of DymaxIO and see if it solves it. You might be pleasantly surprised, saving both the cost and effort typically associated with troubleshooting!

Defragmentation Performance Gains

Say goodbye to old-school defrag and embrace optimization technologies that proactively prevent fragmentation and inefficient I/O operations, and intelligently cache hot reads. Experience a multitude of benefits, including:

- Blazing fast application performance

- Reduced timeouts and crashes

- Improved throughput and efficiency

- Extended hardware lifecycle

- Get more work done in less time

- Plus much more!

Get these benefits and more! See for yourself with a free 30-day trial of DymaxIO here.

SQL Server Performance and Fragmentation

Among enterprise applications, SQL Server applications stand out as the most I/O intensive, making them susceptible to performance degradation. Implementing strategies to minimize the required I/O for SQL tasks can significantly enhance the server’s overall performance for the application, offering a key advantage for database administrators.

Get the details here: Get the Most Performance From Your SQL Server

SAN, NAS, RAID and Fragmentation

Email Servers and Fragmentation

Fragmentation and Defragmentation Testing and White papers

Selecting an Effective Defragmentation Solution

While traditional defragmentation methods may have once been standard practice, modern Windows systems require a more sophisticated approach to combat fragmentation effectively. Despite hardware upgrades, the accumulation of fragmented data on a computer’s storage device can significantly degrade system performance, leading to slowdowns and potential crashes. So, what’s the best solution in today’s landscape?

The most efficient and contemporary approach to addressing fragmentation is real-time prevention. This capability is essential for sustaining peak system speed and reliability, regardless of the system’s workload.

Rather than relying on traditional “defragmentation tools,” today’s systems necessitate solutions that seamlessly prevent fragmented data from being written to the storage device. It’s crucial that these solutions operate without monopolizing system resources during the process.

When selecting a performance optimization solution, prioritize one that is trusted, reliable, and certified to ensure effectiveness and provide peace of mind.

IntelliWrite® Fragmentation Prevention Technology

Historically, fragmentation has been addressed reactively: through the defragmentation process, after it has already happened. In the “early days”, fragmentation was addressed by transferring files to clean hard drives. Then manual defrag programs were introduced, followed by scheduled defragmenters with varying degrees of automation. Truly automatic defrag was finally achieved with the development of InvisiTasking® technology by Condusiv Technologies in 2007.

However, despite all the progress made with defrag methods, fragmentation wastes precious I/O resources twice: first when the system writes non-contiguous files to scattered free spaces across the disk, and again when it spends more I/O resources to defragment them. Clearly the best strategy is to prevent the problem before it happens and always work with clean, fast storage.

Drawing on knowledge of the Windows file system, IntelliWrite technology controls file system operations and prevents the fragmentation that would otherwise occur.

Key Features:

- Significantly improves system performance above the levels achieved with automatic defragmentation alone.

- The improvement is particularly significant for busy servers and virtual systems, where background or scheduled defragmentation has only limited time slots in which to run. In extreme cases, this can make the difference between being able to eradicate fragmentation or not.

- Substantially prevents file fragmentation before it happens, up to 85% or more.

- Can be enabled or disabled per volume.

- Can be run in coordination with automatic defragmentation (strongly recommended for optimal performance), or independently.

- Supports NTFS and FAT file systems on Microsoft Windows operating systems.

- Overall lower system resource usage and consequently lower energy consumption.

Key Benefits:

- Prevents most fragmentation before it happens

- Improves file write performance

- Saves energy while improving performance (5.4% in controlled tests)

- Compatible and interoperable with other storage management solutions

IntelliWrite drastically reduces the effects of fragmentation on any system running the Windows operating system by preventing fragmentation before it happens. This translates directly into improved system speed, performance, reliability, stability, and longevity.

InvisiTasking® background processing

InvisiTasking was coined from “invisible” and “multitasking”, and this technological breakthrough promises to change the way the world operates and maintains its computer systems. InvisiTasking allows computers to do something that has never been done before: run at peak performance, continuously, without interfering with system performance or resources, even when demand is at its highest!

InvisiTasking allows Condusiv’s software to eliminate fragmentation on the fly, in real time, so that fragmentation never has a chance to interfere with the system. Best of all, InvisiTasking does this automatically, without any input from the user, regardless of the size of the network. Whether it’s one PC or thousands, just install Condusiv software and it will take care of the rest!

It’s important to note that InvisiTasking is far more advanced than earlier low-priority I/O approaches that rely on “I/O throttling” to reduce resource conflicts. Through its advanced technology, InvisiTasking goes beyond I/O to address overall system resource usage proactively, making sure that background operations take place invisibly, with true transparency.

Solution to Eliminate Fragmentation and Speed up Computer Systems

Diskeeper has been increasing PC and Server performance by eliminating and preventing fragmentation for millions of global customers for decades. Diskeeper also includes caching technology for faster-than-new computer performance.

All of Diskeeper’s features and functionality are now included in DymaxIO.

DymaxIO is the most cost-effective, easy, and indispensable solution for fast data, increased throughput, and accelerated I/O performance so systems and applications run at peak performance for as long as possible.

To learn more visit condusiv.com/dymaxio

As with defragmenting any other system, it simply takes less time and fewer system resources to access a contiguous file than one broken into many individual pieces. This improves not only response time but also system reliability. Thorough email server database maintenance requires a combination of disk defragmentation and the email server’s own utilities (internal record/index defragmentation) to achieve optimum performance and response time.